Neural Scaling Laws: Bao Ng's Insights Unveiled

Neural scaling laws have emerged as a pivotal concept in artificial intelligence, describing how model performance scales with data, compute, and parameters. Bao Ng, a renowned researcher, has contributed significantly to this area, with findings that reshape our understanding of AI scalability. This blog delves into Bao Ng's insights and explores their implications for both informational and commercial audiences.

Understanding Neural Scaling Laws

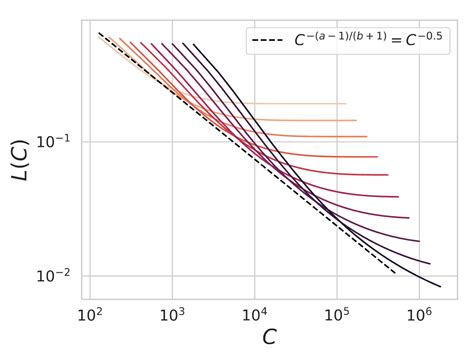

Neural scaling laws are the empirical relationships between a model’s performance and three resources: the amount of data it’s trained on, the compute used for training, and its parameter count. Bao Ng’s research highlights how these factors interact to influence model efficiency and accuracy.

Key Components of Neural Scaling Laws

- Data Scaling: How model performance improves with more training data.

- Compute Scaling: The impact of increased computational power on model training.

- Parameter Scaling: The relationship between model size and performance.
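To make the three components concrete, here is a minimal sketch of one functional form that often appears in scaling-law studies: a loss floor plus one power-law term per resource. This is an illustration, not Bao Ng's model. The constants, the function names, and the rule of thumb of roughly 6 FLOPs per parameter per token are all assumptions chosen for the example.

```python
# Illustrative only: a power-law loss form often used in scaling-law studies.
# Every constant below is hypothetical, not taken from any published fit.
E = 1.7                  # irreducible loss floor (assumed)
A, ALPHA = 400.0, 0.34   # parameter-scaling coefficient and exponent (assumed)
B, BETA = 410.0, 0.28    # data-scaling coefficient and exponent (assumed)


def predicted_loss(n_params: float, n_tokens: float) -> float:
    """Loss modeled as a floor plus one power-law term per resource."""
    return E + A / n_params**ALPHA + B / n_tokens**BETA


def training_flops(n_params: float, n_tokens: float) -> float:
    """Rough compute estimate, assuming about 6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_tokens


if __name__ == "__main__":
    # Scale parameters and data together and watch loss and compute move.
    for n, d in [(1e8, 2e9), (1e9, 2e10), (1e10, 2e11)]:
        print(f"N={n:.0e} D={d:.0e} "
              f"FLOPs={training_flops(n, d):.1e} "
              f"loss={predicted_loss(n, d):.3f}")
```

In this form, data scaling and parameter scaling each get their own term, while compute is what ties the two together: for a fixed budget, spending more on one leaves less for the other.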

Bao Ng’s Contributions to Neural Scaling Laws

Bao Ng’s work has provided critical insights into optimizing AI models by identifying efficient scaling strategies. His research emphasizes the importance of balancing data, compute, and parameters to achieve optimal performance.

Notable Findings by Bao Ng

| Finding | Implication |
|---|---|
| Diminishing returns in data scaling | Beyond a certain point, adding more data yields minimal performance gains. |
| Optimal compute-to-parameter ratio | Identifying the ideal balance between computational resources and model size. |
| Cross-architecture scalability | Insights applicable across different neural network architectures. |
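The first two findings in the table can be illustrated with a small sketch. It assumes a power-law loss of the form L = E + A/N^alpha + B/D^beta and a compute budget of roughly 6 * N * D FLOPs. Both are common simplifications rather than results from Bao Ng's work, and every constant and function name here is hypothetical.

```python
# Hypothetical constants for an assumed loss form L = E + A/N^alpha + B/D^beta.
E, A, ALPHA, B, BETA = 1.7, 400.0, 0.34, 410.0, 0.28


def loss(n_params: float, n_tokens: float) -> float:
    """Assumed power-law loss in parameter count N and training tokens D."""
    return E + A / n_params**ALPHA + B / n_tokens**BETA


def gains_per_data_doubling(n_params, start_tokens, doublings):
    """Loss reduction obtained from each successive doubling of the dataset."""
    gains = []
    tokens = start_tokens
    for _ in range(doublings):
        gains.append(loss(n_params, tokens) - loss(n_params, 2 * tokens))
        tokens *= 2
    return gains


def best_split(flops, grid_points=400):
    """Grid-search the model size that minimizes loss at a fixed budget.

    Assumes compute is roughly 6 * N * D, so choosing N fixes D.
    Returns (n_params, n_tokens) for the best grid point.
    """
    best = None
    for i in range(grid_points):
        n = 10 ** (6 + 6 * i / (grid_points - 1))  # 1e6 to 1e12 parameters
        d = flops / (6.0 * n)
        candidate = (loss(n, d), n, d)
        if best is None or candidate < best:
            best = candidate
    return best[1], best[2]


if __name__ == "__main__":
    # Each doubling of data buys less than the one before it.
    for step, gain in enumerate(gains_per_data_doubling(1e9, 1e9, 6), start=1):
        print(f"doubling {step}: loss drops by {gain:.4f}")

    # For a fixed budget, the best model size sits between the extremes.
    n, d = best_split(1e21)
    print(f"budget 1e21 FLOPs -> N={n:.2e} params, D={d:.2e} tokens")
```

Under these assumptions, the gain from each doubling shrinks geometrically, and the budget search lands on an interior model size: too small a model wastes data, too large a model starves it.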

Practical Applications of Neural Scaling Laws

For commercial audiences, understanding neural scaling laws is crucial for cost-effective AI deployment. Bao Ng’s insights help businesses allocate data, compute, and model size more efficiently, improving the return on their AI investments.

Checklist for Applying Neural Scaling Laws

- Assess current data, compute, and parameter usage.

- Identify bottlenecks in model performance.

- Implement scaling strategies based on Bao Ng’s findings.

- Monitor performance improvements and adjust accordingly.
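One way to ground the checklist is to fit a power law to your own measurements before deciding what to scale. The sketch below is a generic log-log least-squares fit, not a method taken from Bao Ng's research. It assumes you have already subtracted an estimate of the irreducible loss, and the data points are synthetic values made up for the example.

```python
import math


def fit_power_law(xs, ys):
    """Least-squares fit of y = c * x**(-k) in log-log space; returns (c, k)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mean_x = sum(lx) / n
    mean_y = sum(ly) / n
    sxx = sum((x - mean_x) ** 2 for x in lx)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(lx, ly))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope


# Synthetic measurements (hypothetical): reducible loss at several dataset sizes.
tokens = [1e8, 3e8, 1e9, 3e9, 1e10]
reducible_loss = [50.0 * d ** -0.3 for d in tokens]

c, k = fit_power_law(tokens, reducible_loss)
print(f"fitted coefficient={c:.2f}, exponent={k:.3f}")
```

A small fitted exponent suggests that more of that resource will buy little improvement, which points to a bottleneck elsewhere. Real measurements are noisy, so treat a fit like this as a rough guide and re-fit as you collect more runs.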

💡 Note: When applying neural scaling laws, consider the specific requirements of your AI project to avoid over-optimization or underutilization of resources.

Bao Ng's insights into neural scaling laws provide a roadmap for improving AI model performance efficiently. By understanding the interplay between data, compute, and parameters, both informational and commercial audiences can apply these findings to advance their AI initiatives.

What are Neural Scaling Laws?

Neural scaling laws describe how AI model performance changes with data, compute, and parameters, providing insight into how to optimize models efficiently.

How do Bao Ng’s findings impact AI development?

Bao Ng’s research helps developers identify optimal scaling strategies, reducing costs and improving model efficiency.

Can Neural Scaling Laws be applied to any AI model?

Yes, Bao Ng’s insights are applicable across various neural network architectures, making them versatile for different AI applications.